Innovation in bionic limb technology is among the big amputee trends we’re tracking this year, and one of our go-to sources of information on this subject is Bionics for Everyone. This incredibly helpful website is sort of a cross between Wikipedia and Consumer Reports. You get a mix of educational articles that explain how the technology works, updates on the most active research frontiers, and product reviews that weigh the pros and cons of commercially available devices.

Bionics for Everyone unveiled two outstanding overview pieces last month, A Complete Guide to Bionic Arms & Hands and A Complete Guide to Bionic Legs & Feet. Check them out after you’ve read the “state of the industry” article below, submitted by site founder Wayne Williams. Many thanks to Wayne for sharing this content.

As we head into 2021, we think that most of the electromechanical issues related to bionic limbs have been solved. It will be decades before they match the full nuance and dexterity of our natural limbs, if ever. But scientists and engineers now understand the mechanics of our feet, ankles, knees, hands, wrists, elbows, and shoulders sufficiently to replicate our core motions—say, about 90% of our overall mechanical capabilities. (You’ll notice I left the hips off that list.)

The real battle is being fought in the area of user control, and here the approaches are completely different in the upper-limb versus lower-limb categories. The challenge with upper limbs is to properly connect the brain to the device. But in lower limbs, the strategy has instead been to use localized sensors and microprocessors to address mobility issues independent of any connection to the brain.

Let’s examine each area separately.

Upper-Limb Bionics: The Shift to Pattern Recognition

We are on the verge of a paradigm shift in the control of upper-limb bionics. Most current arm/hand devices rely on direct-control myoelectric systems, which use two sensors and require the wearer to explicitly control every device action. Our natural limbs don’t work that way. When you lift your hand to scratch your nose, you don’t exercise conscious control over each muscle. A single thought triggers multiple actions that work together to produce rapid, fluid control.

As a result, direct-control myoelectric prosthetics have not fulfilled the ideal vision for bionic arms, with only 20 to 25 percent of users truly satisfied with how their devices function. These devices are simply too slow, cumbersome, and awkward to work the way most users want. Your degree of satisfaction depends very much on a handful of factors, chiefly:

● The state of your residual limb. Those with natural skin and musculature intact seem to have the most success.

● The degree and success of your muscle control training.

● The compatibility between your residual limb and the particular control system used by your device.

● Your expectation levels and patience.

Recognizing these weaknesses, the industry is moving toward replacing direct-control systems with myoelectric pattern recognition. This approach uses eight sensors in conjunction with AI routines to detect patterns of muscle movement, which are then mapped to bionic arm/hand commands. The main benefit here is that you no longer have to explicitly control every single action. You just think about the action you want to take, and this results in a pattern of muscle movements in the residual limb. As long as the user trains the software to recognize these patterns, the bionic arm/hand will obey. Note that it doesn’t matter what the movements are, as long as they are relatively consistent.
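To make the idea a bit more concrete, here's a minimal sketch of how a pattern recognition controller might map an eight-sensor reading to a hand command. It assumes each reading has already been boiled down to one muscle-activity amplitude per sensor. It uses a simple nearest-centroid classifier as a stand-in for the proprietary algorithms commercial systems actually run. The command names and numbers are made up for illustration.

```python
import math
from statistics import fmean

NUM_CHANNELS = 8  # eight surface sensors, as described above


def train(samples):
    """Average the training readings for each command into one 'typical' pattern.

    samples maps a hypothetical command name (e.g. "close_hand") to a list of
    8-value readings recorded while the user thinks about that action.
    """
    centroids = {}
    for command, readings in samples.items():
        for reading in readings:
            if len(reading) != NUM_CHANNELS:
                raise ValueError("each reading needs one value per sensor")
        # Channel-by-channel average across all readings for this command.
        centroids[command] = [fmean(channel) for channel in zip(*readings)]
    return centroids


def classify(centroids, reading):
    """Pick the command whose typical pattern is closest to the new reading."""
    return min(centroids, key=lambda cmd: math.dist(centroids[cmd], reading))


# Usage with invented training data.
training_data = {
    "rest": [[0.1] * 8, [0.12] * 8],
    "close_hand": [[0.9, 0.8, 0.2, 0.1, 0.1, 0.2, 0.7, 0.9]] * 2,
    "open_hand": [[0.1, 0.2, 0.8, 0.9, 0.9, 0.8, 0.2, 0.1]] * 2,
}
model = train(training_data)
print(classify(model, [0.85, 0.75, 0.25, 0.1, 0.15, 0.2, 0.65, 0.8]))  # close_hand
```

Notice that the classifier never asks *which* muscles moved. It only checks whether a new reading resembles a pattern recorded during training, which is why the underlying movements can be anything as long as they're consistent.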

The problem with this type of system is that it still uses skin surface sensors, which remain prone to signal decay because of arm position, movement, sweat, and other factors. But very recently, the makers of pattern recognition systems have started to offer software training and recalibration programs that address these shortcomings. For example, there’s a mobile app that allows users to recalibrate their pattern recognition systems on the fly.

Prosthetists are already employing pattern recognition systems for some patients. We think this trend will now accelerate and ultimately replace direct control as the mainstream control system. To read more about this technology, see our article on Myoelectric Pattern Recognition for Bionic Arms & Hands.

However, pattern recognition is not the ultimate destination. To truly connect the brain to a bionic arm/hand requires a closed-loop, two-way system combining user control with sensory feedback. This is still mostly in the research stage, though a few advanced (and very expensive) commercial solutions already use it. To understand this technology, see our updated articles on Advanced Neural Interfaces for Bionic Hands, Sensory Feedback for Bionic Hands, and A Quick Look at Electronic Skin.

For a quick overview of the differences and advantages/disadvantages of these different types of upper-limb control systems, see our article on Bionic Arm & Hand Control Systems.

Lower-Limb Bionics: Getting Proactive

The world of lower-limb bionic control systems is completely different from that of upper-limb systems.

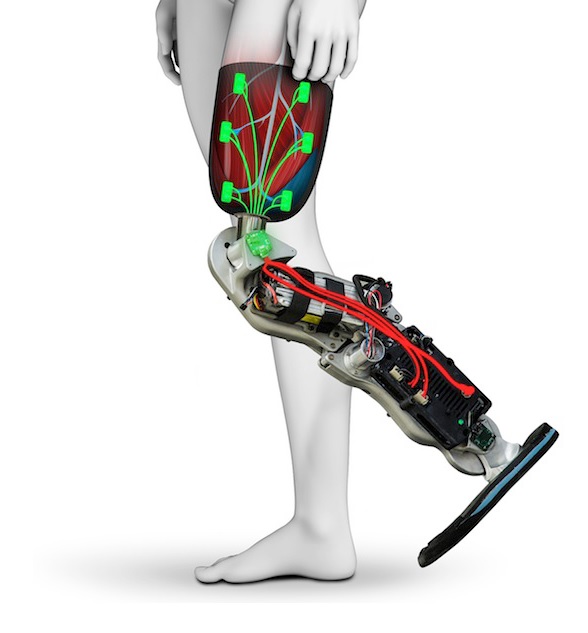

Current lower-limb control systems rely entirely on sensors for leg/joint position, angles, speed, force of impact, terrain, and so forth. Fast microprocessors adjust bionic knees in response to all this data, which can be sampled hundreds of times a second. Fine motor control of the limb is governed automatically by the chip, not by the user’s nervous system (as in upper-limb bionics). To state this contrast another way, lower-limb systems react to mobility decisions the user has already made, whereas upper-limb systems attempt to grant users proactive control.
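Here's a rough sketch of what one cycle of that reactive loop might look like for a microprocessor knee. It's a simplification under stated assumptions: only two sensors (a load cell and a knee velocity sensor), a single "resistance" value between 0 and 1, and tuning numbers that are entirely hypothetical. The point is that the controller only ever looks at what the sensors report right now. It never knows what the user intends to do next.

```python
from dataclasses import dataclass

# Hypothetical tuning values; real devices use manufacturer-specific settings.
LOAD_THRESHOLD_N = 100.0   # load above this means the foot is on the ground
STANCE_DAMPING = 0.9       # high resistance so the knee supports body weight
SWING_BASE_DAMPING = 0.2   # low resistance so the shin can swing forward
SWING_SPEED_GAIN = 0.05    # extra resistance per rad/s of knee velocity in swing
MAX_DAMPING = 1.0


@dataclass
class SensorSample:
    load_newtons: float    # force measured by a load cell in the pylon or foot
    knee_velocity: float   # knee angular velocity in rad/s


def knee_damping(sample: SensorSample) -> float:
    """Choose a knee resistance (0..1) for one control cycle from sensor data alone."""
    if sample.load_newtons > LOAD_THRESHOLD_N:
        # Stance: the step has already happened, so resist buckling.
        return STANCE_DAMPING
    # Swing: in this sketch, a faster swing gets more resistance.
    damping = SWING_BASE_DAMPING + SWING_SPEED_GAIN * abs(sample.knee_velocity)
    return min(damping, MAX_DAMPING)


def control_loop(samples):
    """Apply the controller to a stream of samples (real chips do this hundreds of times a second)."""
    return [knee_damping(s) for s in samples]


print(control_loop([SensorSample(600.0, 0.5), SensorSample(20.0, 4.0)]))
```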

To be perfectly honest, the lower-limb solutions have been far more effective in terms of functionality. Check out this video of a double below-the-knee amputee using a pair of Empower Ankles to run up and down stairs to see what I mean. That’s pretty close to natural capabilities!

However, it’s still a problem that these systems are essentially reactive rather than proactive. For example, as you approach a set of stairs, you move the remaining muscles in your hip and residual leg, and the bionic limb has to follow along rather clumsily, because it is usually attached by a socket. Only when the prosthetic foot touches the ground do the microprocessors kick in, reacting to a step that is already underway.

It would be better if you could communicate with the bionic limb in advance of the movement. For this to work, sensors would have to be implanted in the muscles or attached to the nerves of the residual limb. As you went to take your first step up the stairs, the sensors would detect the signals to the muscles and transmit them to the bionic device slightly in advance of the first step, allowing it to adjust ahead of time. This type of system would likely incorporate augmentative powered propulsion, too, with the muscle signals both initiating that propulsion and modulating how much power it uses.
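One way to picture that head start is to measure how long before foot contact the muscle signal "switches on." The sketch below is a minimal illustration, not how any particular device works. It assumes a single implanted-sensor channel sampled at a hypothetical 1,000 samples per second. It smooths the raw signal into a moving root-mean-square (RMS) envelope and flags the first sample where that envelope crosses a threshold. The window size, threshold, and signal values are all made up.

```python
import math

SAMPLE_RATE_HZ = 1000  # hypothetical sensor sampling rate


def rms_envelope(signal, window):
    """Moving root-mean-square of the raw muscle signal over the last `window` samples."""
    envelope = []
    for i in range(len(signal)):
        chunk = signal[max(0, i - window + 1):i + 1]
        envelope.append(math.sqrt(sum(x * x for x in chunk) / len(chunk)))
    return envelope


def detect_onset(signal, threshold, window=50):
    """Return the first sample index where muscle activity exceeds the threshold, or None."""
    for i, level in enumerate(rms_envelope(signal, window)):
        if level > threshold:
            return i
    return None


def lead_time_ms(onset_index, foot_contact_index):
    """How far ahead of foot contact the device would have been warned, in milliseconds."""
    return (foot_contact_index - onset_index) * 1000 / SAMPLE_RATE_HZ


# Invented signal: quiet muscle, then activation starting at sample 200.
signal = [0.01] * 200 + [0.5] * 300
onset = detect_onset(signal, threshold=0.1)
print(onset, lead_time_ms(onset, foot_contact_index=350))  # 201 149.0
```

A proactive controller would use that lead time to start adjusting (and, in a powered device, to start applying propulsion) before the foot ever lands.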

Meanwhile, the reactive processes—everything that happens after the foot hits the stair—would still take place as described above. But the overall motion would be more balanced and fluid because of the proactive actions.

An even better change would be to introduce sensory feedback. In addition to having control sensors implanted in the residual limb, these systems would also have electrodes implanted in the residual nerves to trick the brain into feeling various sensations. For example, a number of companies are developing sensory foot soles/pads that can detect the kind of surface you are walking on. The sensory information from these soles/pads would be sent to the aforementioned electrodes, allowing the user to sense, for instance, that they are walking on uneven terrain or a slippery slope.
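As a rough illustration of the sole-to-nerve link, here's a minimal sketch that turns pressure readings from a few sole sensors into stimulation levels for matching electrode channels. It assumes a simple linear mapping between a user's perception threshold and their maximum comfortable level. That is only one of several encoding schemes researchers experiment with. The calibration values, units, and sensor names are hypothetical.

```python
# Hypothetical per-user calibration values, normally found in a fitting session.
PERCEPTION_THRESHOLD_UA = 20.0   # weakest stimulation (microamps) the user can feel
MAX_COMFORTABLE_UA = 80.0        # strongest stimulation that is still comfortable
MAX_PRESSURE_KPA = 300.0         # sole pressure mapped to the strongest sensation


def stimulation_amplitude(pressure_kpa: float) -> float:
    """Linearly map sole pressure to nerve stimulation amplitude (microamps).

    No pressure means no stimulation; any contact starts at the perception
    threshold so the user always feels it; pressure beyond the maximum is clamped.
    """
    if pressure_kpa <= 0:
        return 0.0
    fraction = min(pressure_kpa / MAX_PRESSURE_KPA, 1.0)
    return PERCEPTION_THRESHOLD_UA + fraction * (MAX_COMFORTABLE_UA - PERCEPTION_THRESHOLD_UA)


def sole_to_stimulation(sensor_pressures):
    """Map each sole sensor site (e.g. heel, midfoot, toe) to its own electrode channel."""
    return {site: stimulation_amplitude(p) for site, p in sensor_pressures.items()}


print(sole_to_stimulation({"heel": 150.0, "midfoot": 0.0, "toe": 600.0}))
```

On uneven ground the pressure would be spread unevenly across the sites, so the user would feel an uneven pattern of sensations rather than a single on/off signal.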

These advanced control and sensory feedback systems are still primarily in the laboratory, but they are starting to trickle into commercial products. For 2021, reactive microprocessors will continue to rule the roost in bionic legs. In upper-limb bionics, however, I expect the transition to pattern recognition systems to accelerate this year.